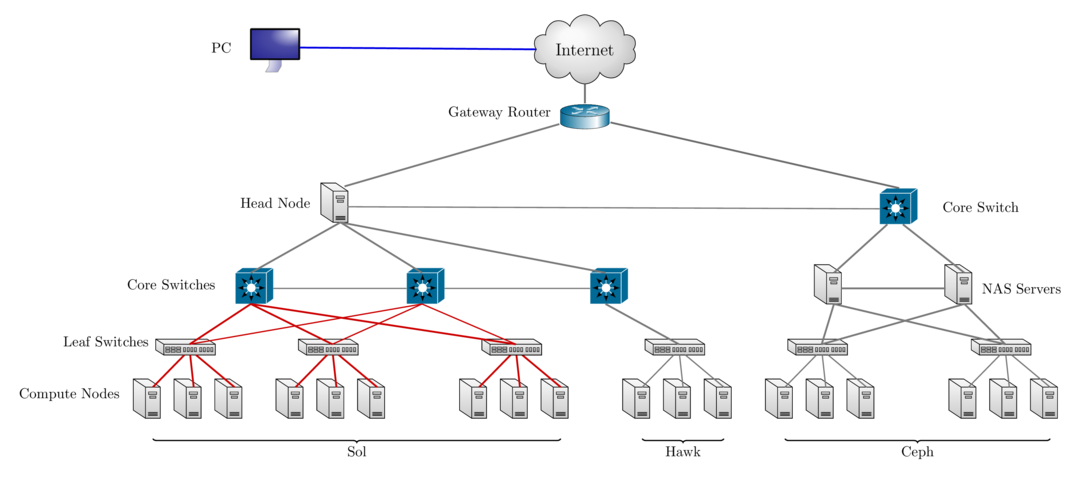

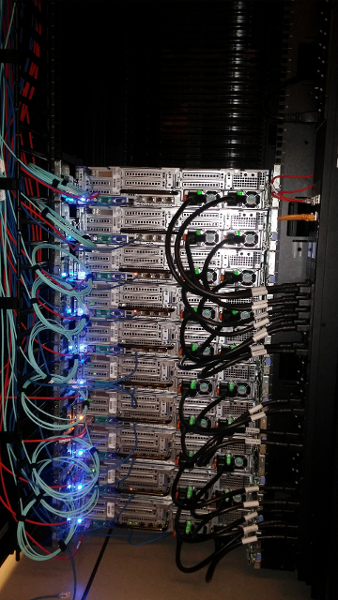

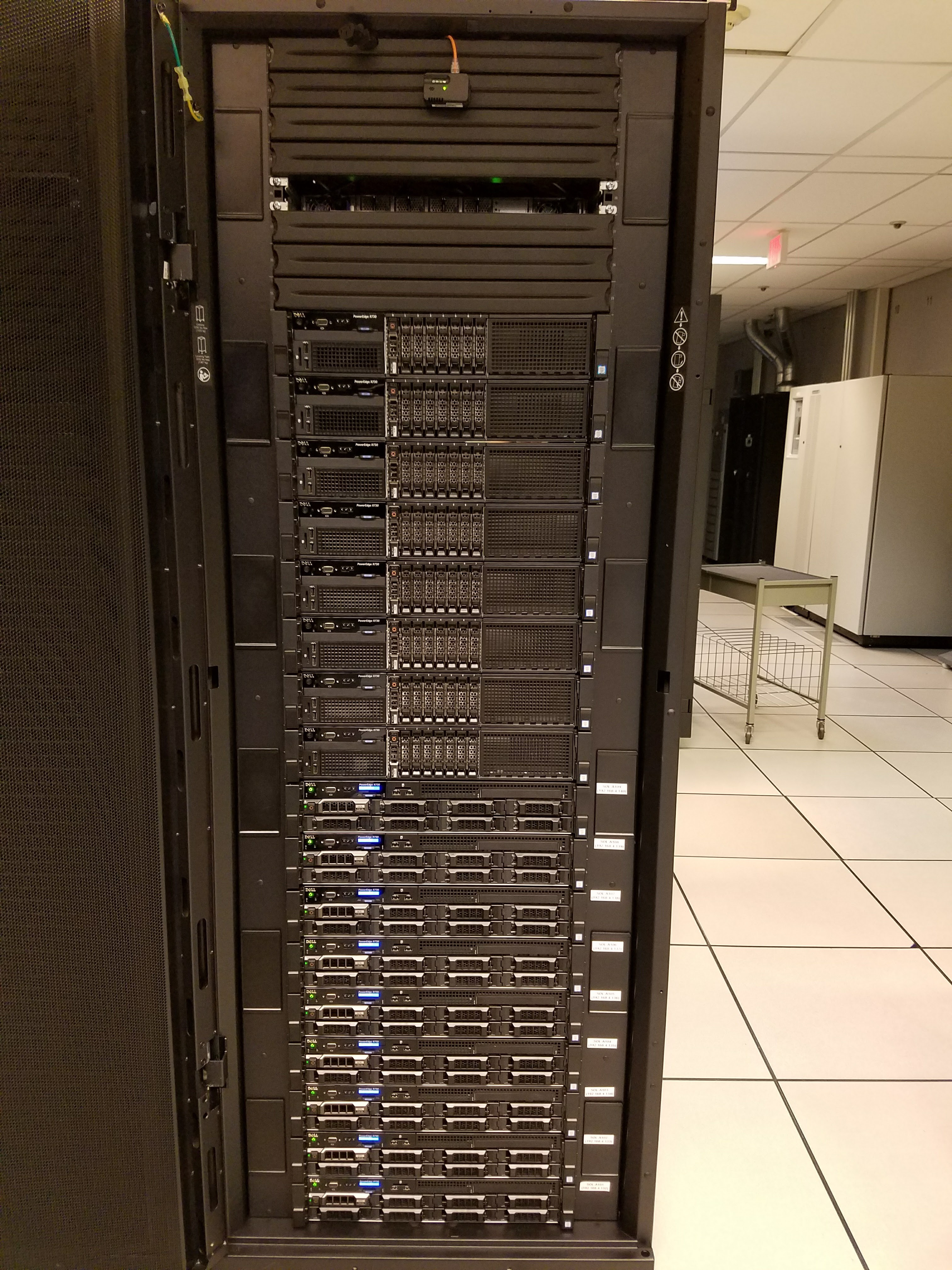

class: center, middle, inverse, title-slide

# Research Computing Resources
## @ Lehigh University
### Library & Technology Services
### <a href="https://researchcomputing.lehigh.edu" class="uri">https://researchcomputing.lehigh.edu</a>

---
class: myback

# About Us

* Who?
  * Unit of Lehigh's Library & Technology Services
- Our Mission
  - We enable Lehigh faculty, researchers and scholars to achieve their goals by providing various computational resources (hardware, software, and storage), consulting and training.
* Research Computing Staff
  * __Alex Pacheco, Manager & XSEDE Campus Champion__
  * Steve Anthony, System Administrator
  * Dan Brashler, CAS Computing Consultant
  * Sachin Joshi, Data Analyst & Visualization Specialist
* Volunteer Staff
  * Keith Erekson, SET/System Architect
  * Jeremy Mack, DST/Geospatial Data and Visualization Specialist

---
# What do we do?

* Hardware Support
  * Provide system administration and support for Lehigh's HPC clusters.
  * Assist with the purchase, installation and administration of servers and clusters.
- Data Storage
  - Provide data management services, including storing and sharing data.
* Software Support
  * Provide technical support for software applications, install software as requested, and assist with the purchase of software.
- Training & Consulting
  - Provide education and training programs to facilitate the use of HPC resources and general scientific computing needs.
  - Provide consultation and support for code development and visualization.

---
class: inverse, middle

# What is HPC?
# Who uses it?

---
# Background and Definitions

* Computational Science and Engineering (CSE)
  * Gain understanding, mainly through the analysis of mathematical models implemented on computers.
  * Construct mathematical models and quantitative analysis techniques, using computers to analyze and solve scientific problems.
  * Typically, these models require large numbers of floating-point calculations that are not feasible on desktops and laptops.
- High Throughput Computing (HTC): use of many computing resources over long periods of time to accomplish a computational task.
  - large number of loosely coupled tasks.
* High Performance Computing (HPC): use of large amounts of computing power for short periods of time.
  * tightly coupled parallel jobs.
- Supercomputer: a computer at the frontline of current processing capacity.

---
# Why use HPC?

* HPC may be the only way to achieve computational goals in a given amount of time.
  * **Size**: Many problems that are interesting to scientists and engineers cannot fit on a PC, usually because they need more than a few GB of RAM or more than a few hundred GB of disk.
  * **Speed**: Many problems that are interesting to scientists and engineers would take a very long time to run on a PC: months or even years. A problem that would take a month on a PC might take only a few hours on a supercomputer.

<br />

.center[
<img src="assets/img/irma-at201711_ensmodel_10-5PM.gif" alt="Irma Ensemble Model" width="200px">
<img src="assets/img/irma-at201711_model-10-5PM.gif" alt="Irma High Probability" width="200px">
<img src="assets/img/jose-at201712_ensmodel.gif" alt="Jose Ensemble Model" width="200px">
<img src="assets/img/jose-euro-sep11.png" alt="Jose Euro Model Track Forecast" width="210px">
]

---
# Parallel Computing

* Many calculations are carried out simultaneously.
- Based on the principle that large problems can often be divided into smaller ones, which are then solved in parallel.
* Parallel computers can be roughly classified according to the level at which the hardware supports parallelism:
  * Multicore computing
  * Symmetric multiprocessing
  * Distributed computing
  * Grid computing
  * General-purpose computing on graphics processing units (GPGPU)

---
# What does HPC do?
.pull-left[
* Simulation of Physical Phenomena
  * Storm Surge Prediction
  * Black Holes Colliding
  * Molecular Dynamics
- Data Analysis and Mining
  - Bioinformatics
  - Signal Processing
  - Fraud Detection
* Visualization

.center[
<img src="assets/img/Isaac-Storm-Surge.jpeg" alt="Isaac Storm Surge" width="200px">
<img src="assets/img/Colliding-Black-Holes.jpeg" alt="Colliding Black Holes" width="170px">]
]

.pull-right[
* Design
  * Supersonic ballute
  * Boeing 787 design
* Drug Discovery
* Oil Exploration and Production
* Automotive Design
* Art and Entertainment

.center[
<img src="assets/img/Molecular-Dynamics.jpeg" alt="Molecular Dynamics" width="180px">
<img src="assets/img/Plane-Design.jpg" alt="Plane Design" width="180px">]
]

---
# HPC by Disciplines

* Traditional Disciplines
  * Science: Physics, Chemistry, Biology, Material Science
  * Engineering: Mechanical, Structural, Civil, Environmental, Chemical
- Non-Traditional Disciplines
  - Finance
    - Predictive Analytics
    - Trading
  - Humanities
    - Culturomics or cultural analytics: study of human behavior and cultural trends through quantitative analysis of digitized texts, images and videos.
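---
# Example: Dividing a Problem into Parallel Pieces

The principle on the Parallel Computing slide, dividing a large problem into smaller independent pieces and combining their results, can be sketched in a few lines of Python. This is an illustrative example, not Lehigh software: the sum-of-squares workload and the helper names `split`, `partial_sum` and `parallel_sum_of_squares` are hypothetical. A thread pool is used only so the sketch runs anywhere; CPU-bound Python code would typically use separate processes or MPI to get a real speedup on a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def split(n, parts):
    """Divide range(n) into `parts` contiguous chunks whose sizes differ by at most one."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for p in range(parts):
        stop = start + step + (1 if p < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum(bounds):
    """Solve one subproblem: the sum of i*i over the half-open range [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Hand each chunk to a worker, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, split(n, workers)))


if __name__ == "__main__":
    n = 1_000_000
    # The parallel answer must match the serial one.
    assert parallel_sum_of_squares(n) == sum(i * i for i in range(n))
    print(parallel_sum_of_squares(n, workers=8))
```

Because each chunk is independent, this is the loosely coupled (HTC-style) case; tightly coupled HPC jobs also exchange data between pieces while they run.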
---
class: inverse, middle

# Research Computing Resources

---
# Sol: Lehigh's Shared HPC Cluster

<table>
<thead>
<tr>
<th style="text-align:right;"> Nodes </th>
<th style="text-align:left;"> Intel Xeon CPU Type </th>
<th style="text-align:left;"> CPU Speed (GHz) </th>
<th style="text-align:right;"> CPUs </th>
<th style="text-align:right;"> GPUs </th>
<th style="text-align:right;"> CPU Memory (GB) </th>
<th style="text-align:right;"> GPU Memory (GB) </th>
<th style="text-align:right;"> CPU TFLOPS </th>
<th style="text-align:right;"> GPU TFLOPS </th>
<th style="text-align:right;"> SUs </th>
</tr>
</thead>
<tbody>
<tr> <td style="text-align:right;"> 9 </td> <td style="text-align:left;"> E5-2650 v3 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 180 </td> <td style="text-align:right;"> 10 </td> <td style="text-align:right;"> 1024 </td> <td style="text-align:right;"> 80 </td> <td style="text-align:right;"> 5.7600 </td> <td style="text-align:right;"> 2.570 </td> <td style="text-align:right;"> 1576800 </td> </tr>
<tr> <td style="text-align:right;"> 33 </td> <td style="text-align:left;"> E5-2670 v3 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 792 </td> <td style="text-align:right;"> 62 </td> <td style="text-align:right;"> 4224 </td> <td style="text-align:right;"> 496 </td> <td style="text-align:right;"> 25.3440 </td> <td style="text-align:right;"> 15.934 </td> <td style="text-align:right;"> 6937920 </td> </tr>
<tr> <td style="text-align:right;"> 14 </td> <td style="text-align:left;"> E5-2650 v4 </td> <td style="text-align:left;"> 2.2 </td> <td style="text-align:right;"> 336 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 896 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 9.6768 </td> <td style="text-align:right;"> 0.000 </td> <td style="text-align:right;"> 2943360 </td> </tr>
<tr> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> E5-2640 v3 </td> <td style="text-align:left;"> 2.6 </td> <td style="text-align:right;"> 16 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0.5632 </td> <td style="text-align:right;"> 0.000 </td> <td style="text-align:right;"> 140160 </td> </tr>
<tr> <td style="text-align:right;"> 24 </td> <td style="text-align:left;"> Gold 6140 </td> <td style="text-align:left;"> 2.3 </td> <td style="text-align:right;"> 864 </td> <td style="text-align:right;"> 48 </td> <td style="text-align:right;"> 4608 </td> <td style="text-align:right;"> 528 </td> <td style="text-align:right;"> 41.4720 </td> <td style="text-align:right;"> 18.392 </td> <td style="text-align:right;"> 7568640 </td> </tr>
<tr> <td style="text-align:right;"> 6 </td> <td style="text-align:left;"> Gold 6240 </td> <td style="text-align:left;"> 2.6 </td> <td style="text-align:right;"> 216 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1152 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 10.3680 </td> <td style="text-align:right;"> 0.000 </td> <td style="text-align:right;"> 1892160 </td> </tr>
<tr> <td style="text-align:right;"> 2 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 104 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 768 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 4.3264 </td> <td style="text-align:right;"> 0.000 </td> <td style="text-align:right;"> 911040 </td> </tr>
<tr> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 89 </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 2508 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 120 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 13184 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 1104 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 97.5104 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 36.896 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 21970080 </td> </tr>
</tbody>
</table>

- Built by investments from the Provost<sup>a</sup> and faculty.
- 87 nodes interconnected by a 2:1 oversubscribed InfiniBand EDR (100Gb/s) fabric.
- Only the 1.40M SUs from the Provost investment are available to Lehigh researchers.

.footnote[
a: 8 Intel Xeon E5-2650 v3 nodes funded by the Provost.
]

---
# Condo Investments

* Sustainable model for High Performance Computing at Lehigh.
* Faculty (Condo Investors) purchase compute nodes from grants to increase the overall capacity of Sol.
* For four years, or the length of the hardware warranty purchased, LTS will provide
  * system administration, power and cooling, and user support for Condo Investments.
* Condo Investors
  * receive an annual allocation equivalent to their investment for the length of the investment,
  * can utilize their allocations on all available nodes, including nodes from other Condo Investors,
  * allow idle cycles on their investment to be used by other Sol users.
  * Unused allocations do not roll over to the next allocation cycle.
  * The annual allocation cycle is Oct. 1 - Sep. 30.
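---
# Example: Estimating Annual SUs

Assuming 1 SU corresponds to one CPU core-hour, every entry in the SUs column of the Sol table equals CPUs × 8,760 hours (24 × 365), and the same arithmetic estimates the annual allocation equivalent to a condo investment. A minimal Python sketch; `annual_sus` is a hypothetical helper, and the 8,760-hour year ignores leap years and downtime.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years and downtime


def annual_sus(cpus):
    """Annual SUs for a number of CPU cores, assuming 1 SU = 1 core-hour."""
    return cpus * HOURS_PER_YEAR


# CPU (core) counts per row of the Sol table
sol_cpus = {
    "E5-2650 v3": 180,
    "E5-2670 v3": 792,
    "E5-2650 v4": 336,
    "E5-2640 v3": 16,
    "Gold 6140": 864,
    "Gold 6240": 216,
    "Gold 6230R": 104,
}

for cpu_type, cpus in sol_cpus.items():
    print(f"{cpu_type:>11}: {annual_sus(cpus):>9,} SUs")

total_cpus = sum(sol_cpus.values())
print(total_cpus, annual_sus(total_cpus))  # 2508 21970080

# Split between the Provost investment (8 nodes x 20 cores) and condo investments
print(annual_sus(8 * 20))  # 1401600
print(annual_sus(2348))    # 20568480, i.e. about 20.57M
```

The last two figures reproduce the 1.40M and 20.57M SU totals quoted for the Lehigh and condo investments.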
---
# Condo Investors

* Dimitrios Vavylonis, Physics (1 node)
* Wonpil Im, Biological Sciences (37 nodes, 102 GPUs)
* Anand Jagota, Chemical Engineering (1 node)
* Brian Chen, Computer Science & Engineering (3 nodes)
* Ed Webb & Alp Oztekin, Mechanical Engineering (6 nodes)
* Jeetain Mittal & Srinivas Rangarajan, Chemical Engineering (13 nodes, 18 GPUs)
* Seth Richards-Shubik, Economics (1 node)
* Ganesh Balasubramanian, Mechanical Engineering (7 nodes)
* Department of Industrial & Systems Engineering (2 nodes)
* Paolo Bocchini, Civil and Structural Engineering (1 node)
* Lisa Fredin, Chemistry (6 nodes)
* Hannah Dailey, Mechanical Engineering (1 node)
* College of Health (2 nodes)

- Condo Investments: 81 nodes, 2348 CPUs, 120 GPUs, 20.57M SUs
- Lehigh Investments: 8 nodes, 160 CPUs, 1.40M SUs

---
# Hawk

* Funded by [NSF Campus Cyberinfrastructure award 2019035](https://www.nsf.gov/awardsearch/showAward?AWD_ID=2019035&HistoricalAwards=false).
  - PI: Ed Webb (MEM).
  - co-PIs: Balasubramanian (MEM), Fredin (Chemistry), Pacheco (LTS), and Rangarajan (ChemE).
  - Sr. Personnel: Anthony (LTS), Reed (Physics), Rickman (MSE), and Takáč (ISE).
* Compute
  - 26 nodes, dual 26-core Intel Xeon Gold 6230R, 2.1GHz, 384GB RAM.
  - 4 nodes, dual 26-core Intel Xeon Gold 6230R, 1536GB RAM.
  - 4 nodes, dual 24-core Intel Xeon Gold 5220R, 192GB RAM, 8 NVIDIA Tesla T4 GPUs.
* Storage: 798TB (225TB usable)
  - 7 nodes, single 16-core AMD EPYC 7302P, 3.0GHz, 128GB RAM, two 240GB SSDs (for OS).
  - Per node
    - 3x 1.9TB SATA SSD (for CephFS).
    - 9x 12TB SATA HDD (for Ceph).
* Production: **Feb 1, 2021**.
---
### Hawk

<table>
<thead>
<tr>
<th style="text-align:right;"> Nodes </th>
<th style="text-align:left;"> Intel Xeon CPU Type </th>
<th style="text-align:left;"> CPU Speed (GHz) </th>
<th style="text-align:right;"> CPUs </th>
<th style="text-align:right;"> GPUs </th>
<th style="text-align:right;"> CPU Memory (GB) </th>
<th style="text-align:right;"> GPU Memory (GB) </th>
<th style="text-align:right;"> CPU TFLOPS </th>
<th style="text-align:right;"> GPU TFLOPS </th>
<th style="text-align:right;"> SUs </th>
</tr>
</thead>
<tbody>
<tr> <td style="text-align:right;"> 26 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 1352 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 9984 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 56.2432 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 11843520 </td> </tr>
<tr> <td style="text-align:right;"> 4 </td> <td style="text-align:left;"> Gold 6230R </td> <td style="text-align:left;"> 2.1 </td> <td style="text-align:right;"> 208 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 6144 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 8.6528 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 1822080 </td> </tr>
<tr> <td style="text-align:right;"> 4 </td> <td style="text-align:left;"> Gold 5220R </td> <td style="text-align:left;"> 2.2 </td> <td style="text-align:right;"> 192 </td> <td style="text-align:right;"> 32 </td> <td style="text-align:right;"> 768 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 4.3008 </td> <td style="text-align:right;"> 8.10816 </td> <td style="text-align:right;"> 1681920 </td> </tr>
<tr> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 34 </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #643700 !important;"> </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 1752 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 32 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 16896 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 512 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 69.1968 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 8.10816 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 15347520 </td> </tr>
</tbody>
</table>

--

### Hawk and Lehigh's Investment in Sol

<table>
<thead>
<tr>
<th style="text-align:right;"> Nodes </th>
<th style="text-align:right;"> CPUs </th>
<th style="text-align:right;"> GPUs </th>
<th style="text-align:right;"> CPU Memory (GB) </th>
<th style="text-align:right;"> GPU Memory (GB) </th>
<th style="text-align:right;"> CPU TFLOPS </th>
<th style="text-align:right;"> GPU TFLOPS </th>
<th style="text-align:right;"> SUs </th>
</tr>
</thead>
<tbody>
<tr> <td style="text-align:right;"> 8 </td> <td style="text-align:right;"> 160 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1024 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 5.8880 </td> <td style="text-align:right;"> 0.00000 </td> <td style="text-align:right;"> 1401600 </td> </tr>
<tr> <td style="text-align:right;"> 34 </td> <td style="text-align:right;"> 1752 </td> <td style="text-align:right;"> 32 </td> <td style="text-align:right;"> 16896 </td> <td style="text-align:right;"> 512 </td> <td style="text-align:right;"> 69.1968 </td> <td style="text-align:right;"> 8.10816 </td> <td style="text-align:right;"> 15347520 </td> </tr>
<tr> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 42 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 1912 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 32 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 17920 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 512 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 75.0848 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 8.10816 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #643700 !important;"> 16749120 </td> </tr>
</tbody>
</table>

---
# Ceph Storage

* LTS provides various storage options for research and teaching.
  * Some are cloud based and subject to Lehigh's Cloud Policy.
* Research Computing provides a 1223TB (346.5TB usable) storage system called [Ceph](https://go.lehigh.edu/ceph).
  * Including Hawk's storage, a combined 2019TB (572TB usable) Ceph storage system is available to Lehigh researchers.
* Ceph
  * is based on the Ceph software,
  * is built, operated and administered in-house by Research Computing staff,
  * is located in the EWFM Data Center,
  * provides storage for Research Computing resources,
  * can be mounted as a network drive on Windows, or via CIFS on Mac and Linux.
  * [See the Ceph FAQ](http://lts.lehigh.edu/services/faq/ceph-faq) for more details.
* Research groups can
  * purchase sharable project space at $375/TB for 5 years, OR
  * annually request up to 5TB of sharable project space through a proposal-style request.
  * share project space with anyone with a Lehigh ID.

---
# Network Layout: Sol, Hawk & Ceph

---
# Sol

---
# Data Transfer, ScienceDMZ and Globus

* NSF funded a [Science DMZ](https://www.nsf.gov/awardsearch/showAward?AWD_ID=1340992&HistoricalAwards=false) to improve campus connectivity to the national research cyberinfrastructure.
* Up to 50TB of storage is available on the Data Transfer Node (DTN) on the ScienceDMZ.
  * This storage is only for data transfer. Once a transfer is complete, the data must be deleted from the DTN.
  * Shell access to the DTN is provided on request.
* [Globus](https://www.globus.org) is the preferred method to transfer data to and from NSF and DOE supercomputing centers.
  * A hosted service that manages the entire operation: monitoring performance and errors, retrying failed transfers, correcting problems automatically whenever possible, and reporting status to keep you informed while you focus on your research.
  * [How to Use Globus at Lehigh?](https://researchcomputing.lehigh.edu/help/globus)
  * No special access to the DTN is required to transfer data via Globus.

---
class: inverse, middle

# Accounts & Allocations

---
# Accounts

* Lehigh faculty, research staff and students can request an account on Sol/Hawk.
* All account requests by staff and students need to be sponsored by a Lehigh faculty member and be associated with an active allocation.
* A Lehigh faculty member with no active allocation can request an account for themselves and members of their group while requesting an allocation.
* Accounts that do not have an active allocation for 6 months will be disabled.
  - NO BACKUPS. If your account is deleted, all data in your directories is also deleted.
* Account sharing is strictly prohibited, i.e., no lab accounts or "in" accounts are allowed.

<!--
# Resource Partitioning

| Investigator | Compute (SU) | Storage (TB)|
|:------------:|:-------:|:-------:|
| Proposing Team | 7.67M | 85 |
| OSG | 3.07M | 5 |
| Lehigh General | 3.84M | 75 |
| Provost DF | 0.77M | 20 |
| LTS | | 30TB |

- 50% to proposing team including
  - 300K SUs allocated for educational, outreach and training (EOT).
- 20% of resources with Open Science Grid (Grant requirement)
- 5% of compute and 20TB to be distributed at the Provost’s discretion.
- 30TB to LTS’s R-Drive (provides all faculty up to 1TB of storage space)
- 25% of compute and 75TB available Lehigh community through XSEDE style proposal.
  - reviewed by Research Computing Allocation Review Committee (RCARC)
  - 10% (380K) for small allocations similar to StartUp Allocations on XSEDE.
  - 90% (3.45M) for large allocations similar to Research Allocations on XSEDE.
-->

---
# Resource Management

<!-- - Do away with charges for computing on Sol and add 1.4M SUs from Sol. -->

| Investigator | Compute (SU) | Storage (TB) | Sol Compute (SU) | Total Compute (SU) |
|:------------:|:------------:|:------------:|:----------------:|:------------------:|
| Proposing Team | 7.64M | 80 | | 7.64M |
| DDF | 300K | 20 | 500K | 800K |
| OSG | 3.07M | 5 | | 3.07M |
| StartUp | 380K | 20 | 120K | 500K |
| Research | 3.45M | 40 | 550K | 4M |

<!-- - Director's Discretionary Fund (DDF) to allow HPC Manager to distribute compute and storage. -->

- Faculty PIs can request allocation and storage based on their needs:
  - Trial: for researchers who want to try out Sol/Hawk (DDF).
  - StartUp: for researchers with small computing needs.
  - Research: for researchers with large computing or storage needs.
  - Education: for EOT purposes (DDF).

---
# Director's Discretionary Fund

- The Director's Discretionary Fund enables distributing compute and storage with minimal oversight from the Allocation Committee.
  - A total of 800K SUs, and 40TB of storage when combined with the StartUp storage quota.
- Use Cases
  - Each PI receives a 1TB storage quota that is shared among all their sponsored users.
    - 200GB per active user is recommended, and larger groups can request additional space.
    - PIs who unnecessarily add inactive users to obtain a larger storage quota will have their storage reduced to the default 1TB.
    - Such PIs will have their storage requests reviewed and approved by the allocation committee.
  - Trial Allocations: short-term allocations designed for new users.
  - Education Allocations: for courses, workshops and other EOT purposes.
- Excess SUs will roll over to StartUp, then to Research.
- Excess storage will roll over to Research.

---
# Trial Allocations

- Why?
  - There are research groups that may benefit from HPC resources.
  - HPC resources have a steep learning curve; a trial allocation allows a group to investigate whether HPC resources fit their needs before committing to larger-scale usage.
- Trial Allocations have a maximum of 10K SUs per PI and a 6-month duration.
  - Total available: 300K SUs, 20TB.
- Requirement: a short abstract describing the proposed work.
  - Optional: What is your eventual goal?
    - <em>This is both short-term computing for completing a project and also a trial to determine how well the resources will meet our future needs.</em>
    - <em>The purpose of this proposal is for my laboratory to evaluate use of HPC resources at Lehigh for the more computationally intensive steps of the workflow, highlighted in the figure below.</em>
- A progress report will be required for renewals or subsequent allocation requests.
- Apply anytime at https://go.lehigh.edu/hpcalloctrial

---
# Education Allocations

- Developing the next-generation workforce is a cornerstone of NSF campus research cyberinfrastructure grants.
- Using Hawk for education (coursework and workshops) is strongly encouraged.
- __Courses__: A minimum of 500 SUs per student is recommended.
  - 50K SUs max and 1TB of Ceph space per course.
  - __Capstone projects__ are considered a single course and are eligible for only one allocation.
    - For additional allocations, please request a StartUp or Research allocation.
  - Duration: 2 weeks past the end of the semester.
- __Workshops/Summer Programs__: Faculty, departments, institutes or colleges are eligible for allocations (max 10K SUs) to support workshops and summer programs centered on scientific computing.
  - Duration: length of the workshop, or 2 weeks.
- Total available: 500K SUs
- Apply at https://go.lehigh.edu/hpcallocedu

---
# StartUp Allocations

- StartUp Allocations are small allocation requests (up to 25K SUs) with a 1-year duration.
- A faculty member can be a PI or collaborator on at most two active StartUp Allocations at any time.
- __Requirements__: A short abstract (minimum 500 words, maximum 1 page) describing the work and the amount of computing time requested.
- __Amount Requested__: Include a justification for the amount requested (required for renewals).
  - <em>On the redacted cluster, 30 grids took an average of 4 hours of wall clock time. For 63,676 grids, this would equate to 8,490 SUs. Given 6 experiments, this would total 50,940 SUs. With full AVX512 instructions enabled, Hawk should be twice as fast as the redacted cluster, so that would total 25,470 SUs. Therefore, I am requesting 25K SUs as part of a StartUp allocation.</em>
- Reviewed and approved monthly by at least one allocation committee member.
- Renewal requires a progress report, publication list, grants/awards and a list of graduated students, if any.
- Apply anytime at https://go.lehigh.edu/hpcallocstartup

---
# Research and Storage Allocations

- For requests up to 300K SUs and/or storage up to 5TB.
  - The maximum allocation is 300K SUs and 5TB per PI at any given time.
- Requires a detailed proposal, similar to an XSEDE proposal, which should contain:
  - an abstract, a description of the project and how the resources will be used,
  - a justification of the requested allocation based on benchmark results,
  - a report of prior work done,
  - a list of publications, presentations, awards and students graduated.
- Non-HPC PIs requesting storage allocations should also describe:
  - why other forms of storage are not suitable, and any plans to back up the data stored, and
  - plans to move/save the data if the project is not renewed/approved the next year.
- Reviewed and approved semiannually by the allocation committee.
  - Allocations begin on Jan 1 and Jul 1 and last one year.
  - Calls for proposals will be issued in May and Nov on the HPC mailing list.
  - A seminar on guidelines for requesting Research Allocations will be held sometime in mid-May.
- Total time allocated is 4M SUs annually, or 2M SUs for each semiannual request period.
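---
# Example: Justifying an Allocation from a Benchmark

Both StartUp and Research requests must justify the amount requested, ideally from benchmark results. The arithmetic in the StartUp example can be captured in a small Python helper. This is a sketch, not an official calculator: `estimate_sus` and its parameters are hypothetical, it assumes 1 SU = 1 core-hour, and it assumes one core per benchmark run, which the example's numbers are consistent with.

```python
def estimate_sus(total_tasks, tasks_per_run, hours_per_run,
                 cores_per_run=1, experiments=1, speedup=1.0):
    """Scale a benchmark run up to a full request, assuming 1 SU = 1 core-hour."""
    runs = total_tasks / tasks_per_run               # benchmark-sized runs needed
    core_hours = runs * hours_per_run * cores_per_run
    return core_hours * experiments / speedup        # expected speedup on the new system


# Figures from the StartUp example: 30 grids in ~4 hours, 63,676 grids,
# 6 experiments, and an expected 2x speedup on Hawk.
per_experiment = estimate_sus(63_676, 30, 4)
request = estimate_sus(63_676, 30, 4, experiments=6, speedup=2.0)
print(round(per_experiment), round(request))  # 8490 25470
```

For a multi-core or MPI benchmark, `cores_per_run` should be set to the number of cores the benchmark actually used, since SUs are charged per core rather than per job.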
---
# Steering Committee

| Name | College |
|:----:|:-------:|
| Wonpil Im (co-chair) | CAS |
| Ed Webb (co-chair) | RCEAS |
| Ganesh Balasubramanian | RCEAS |
| Brian Chen | RCEAS |
| Ben Felzer | CAS |
| Lisa Fredin | CAS |
| Srinivas Rangarajan | RCEAS |
| Rosi Reed | CAS |
| Seth Richards-Shubik | CoB |
| Yue Yu | CAS |
| | CoH |
| | CoE |
| Alex Pacheco | LTS |

---
# Steering Committee Responsibility

| Allocation Type | Max SUs | Max TB | Approval Authority | Request Window | Approval Timeline |
|:-----:|:----:|:----:|:----:|:----:|:----:|
| Trial | 10K | 1 | HPC | Rolling | 2-3 business days |
| StartUp & Trial Renewals | 25K | 1 | Two RCARC members | Rolling | 3-4 weeks |
| Research/Storage | 300K | 5 | RCARC | Every 6 months | Within a month of submission deadline |

---
# Acknowledgement

* In publications, reports, and presentations that utilize Sol, Hawk and Ceph, please acknowledge Lehigh University using the following statement:

<span style="font-size:2em">
"Portions of this research were conducted on Lehigh University's Research Computing infrastructure partially supported by NSF Award 2019035"
</span>

---
class: inverse, middle

# Software

---
# Available Software

* [Commercial, Free and Open source software](https://go.lehigh.edu/hpcsoftware).
  - Software is managed using a module environment.
    - Why? We may have different versions of the same software, or software built with different compilers.
    - The module environment allows you to dynamically change your *nix environment based on the software being used.
    - Standard on many university and national High Performance Computing resources since circa 2011.
* How to use HPC software on your [Linux](https://confluence.cc.lehigh.edu/x/ygD5Bg) workstation?
  - LTS provides [licensed and open source software](https://software.lehigh.edu) for Windows, Mac and Linux, and [Gogs](https://gogs.cc.lehigh.edu), a self-hosted Git service similar to GitHub.
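---
# Example: What Loading a Module Does

Conceptually, loading a module prepends a package's directories to search paths such as `PATH` and `LD_LIBRARY_PATH`, and unloading removes them; that is how several versions of the same software can coexist. The Python sketch below only models that idea on a dictionary. It is not how the module system on Sol/Hawk is implemented, `module_load`/`module_unload` are hypothetical names, and the install prefixes are made up. On the clusters themselves you would use shell commands such as `module avail` and `module load`.

```python
import posixpath

# Variables a module file commonly edits, paired with the subdirectory it adds.
MANAGED = (("PATH", "bin"), ("LD_LIBRARY_PATH", "lib"))


def _entries(value):
    """Split a colon-separated search path, dropping empty entries."""
    return [p for p in value.split(":") if p]


def module_load(env, prefix):
    """Return a copy of env with prefix/bin and prefix/lib prepended."""
    new = dict(env)
    for var, sub in MANAGED:
        entry = posixpath.join(prefix, sub)
        rest = [p for p in _entries(new.get(var, "")) if p != entry]
        new[var] = ":".join([entry] + rest)
    return new


def module_unload(env, prefix):
    """Return a copy of env with the entries added by module_load removed."""
    new = dict(env)
    for var, sub in MANAGED:
        entry = posixpath.join(prefix, sub)
        new[var] = ":".join(p for p in _entries(new.get(var, "")) if p != entry)
    return new


# Hypothetical install prefixes for two versions of the same compiler.
OLD, NEW = "/opt/apps/gcc/9.3.0", "/opt/apps/gcc/10.2.0"

env = module_load({"PATH": "/usr/bin:/bin"}, OLD)
print(env["PATH"])                               # /opt/apps/gcc/9.3.0/bin:/usr/bin:/bin
env = module_load(module_unload(env, OLD), NEW)  # switch versions
print(env["PATH"])                               # /opt/apps/gcc/10.2.0/bin:/usr/bin:/bin
```

Real module files can also set other variables and declare conflicts between packages; the sketch covers only the search-path part.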
---
# Installed Software

.pull-left[
* [Chemistry/Materials Science](https://confluence.cc.lehigh.edu/x/3KX0BQ)
  - **CRYSTAL17** (Restricted Access)
  - **GAMESS**
  - Gaussian (Restricted Access)
  - **OpenMolcas**
  - **NWCHEM**
  - **Quantum Espresso**
  - **VASP** (Restricted Access)
* [Molecular Dynamics](https://confluence.cc.lehigh.edu/x/6qX0BQ)
  - **ESPResSo**
  - **GROMACS**
  - **LAMMPS**
  - **NAMD**

<span class="tiny strong">__MPI enabled__</span>
]

.pull-right[
* [Computational Fluid Dynamics](https://confluence.cc.lehigh.edu/x/BZFVBw)
  - Abaqus
  - Ansys
  - Comsol
  - **OpenFOAM**
  - OpenSees
* [Math](https://confluence.cc.lehigh.edu/x/1QL5Bg)
  - Artelys Knitro (node restricted)
  - GNU Octave
  - Gurobi
  - Magma
  - Maple
  - Mathematica
  - MATLAB
]

---
# More Software

.pull-left[
* *Machine & Deep Learning*
  - TensorFlow
  - SciKit-Learn
  - SciKit-Image
  - Theano
  - Keras
* *Natural Language Processing (NLP)*
  - Natural Language Toolkit (NLTK)
  - Stanford NLP

<span class="tiny">_[Python packages](https://go.lehigh.edu/python)_</span>
]

.pull-right[
* [Bioinformatics](https://confluence.cc.lehigh.edu/x/y6X0BQ)
  - BamTools
  - BayeScan
  - bgc
  - BWA
  - FreeBayes
  - SAM Tools
  - tabix
  - trimmomatic
  - Trinity
  - *barcode_splitter*
  - *phyluce*
  - VCF Tools
  - *VelvetOptimiser*
]

---
# More Software

.pull-left[
* Scripting Languages
  - Julia
  - Perl
  - [Python](https://go.lehigh.edu/python)
  - [R](https://confluence.cc.lehigh.edu/x/5aX0BQ)
* [Compilers](https://confluence.cc.lehigh.edu/x/Sab0BQ)
  - GNU
  - Intel
  - Java
  - NVIDIA HPC SDK
  - CUDA
* [Parallel Programming](https://confluence.cc.lehigh.edu/x/Sab0BQ#Compilers-MPI)
  - MVAPICH2
  - MPICH
  - OpenMPI
]

.pull-right[
* Libraries
  - ARPACK/BLAS/LAPACK/GSL
  - FFTW/Intel MKL/Intel TBB
  - Boost
  - Global Arrays
  - HDF5
  - HYPRE
  - NetCDF
  - METIS/PARMETIS
  - MUMPS
  - PETSc
  - QHull/QRupdate
  - SuiteSparse
  - SuperLU
  - TCL/TK
]

---
# More Software

.pull-left[
* [Visualization Tools](https://confluence.cc.lehigh.edu/x/qan0BQ)
  - Atomic Simulation Environment
  - Avogadro
  - Blender
  - Gabedit
  - GaussView
  - GNUPlot
  - GraphViz
  - Paraview
  - POV-RAY
  - PWGui
  - PyMol
  - RDKit
  - VESTA
  - VMD
  - XCrySDen
]

.pull-right[
* Other Tools
  - GNU Make/CMake
  - GDB/DDD
  - Git
  - Intel Advisor/Inspector/VTune Amplifier
  - [GNU Parallel](https://confluence.cc.lehigh.edu/x/B6b0BQ)
  - [Jupyter Lab/Notebooks](https://confluence.cc.lehigh.edu/x/G5JVBw)
  - [RStudio Server](https://confluence.cc.lehigh.edu/x/NpBVBw)
  - Scons
  - Singularity
  - SWIG
  - TMUX/GNU Screen
  - Valgrind/QCacheGrind
  - [Virtual Desktops](https://confluence.cc.lehigh.edu/x/g5BVBw)
]

---
class: inverse, middle

# Any Questions about Lehigh Resources?

# Next: External Resources

---
# XSEDE

* The E<b>x</b>treme <b>S</b>cience and <b>E</b>ngineering <b>D</b>iscovery <b>E</b>nvironment (<strong>XSEDE</strong>) is the most advanced, powerful, and robust collection of integrated advanced digital resources and services in the world.
  * A single virtual system that scientists can use to interactively share computing resources, data, and expertise.
- Resources and services are used by scientists and engineers around the world.
* The five-year, $121-million project is supported by the National Science Foundation.
  * [Awarded a 12-month extension through Aug. 31, 2022](https://www.xsede.org/-/nsf-awards-12-month-extension-for-xsede-2-0-project).
- XSEDE is composed of multiple partner institutions known as Service Providers (SPs), each of which contributes one or more allocatable services.
* Resources include High Performance Computing (HPC) machines, High Throughput Computing (HTC) machines, visualization, data storage, testbeds, and services.

---
# XSEDE Resources

* Indiana University
  * [Jetstream 2](https://nsf.gov/awardsearch/showAward?AWD_ID=2005506&HistoricalAwards=false)
    * AMD Milan CPUs and NVIDIA Tensor Core GPUs, 8 PFLOPS, and 18.5PB storage.
* Pittsburgh Supercomputing Center (PSC)
  * [Bridges 2](https://www.psc.edu/bridges-2)
    * 488 AMD EPYC 7742 nodes, 128 cores/node, 256GB RAM (16 nodes with 512GB RAM).
    * 4 Intel Xeon Platinum 8260M nodes, 48 cores/node, 4 TB RAM.
    * 24 Intel Xeon Gold 6248 nodes, 40 cores/node, 512 GB RAM, 8 NVIDIA V100 SXM2 GPUs/node.
  * [Neocortex](https://www.cmu.edu/psc/aibd/neocortex/)
    * 2 Cerebras CS-1 systems
      * 400,000 Sparse Linear Algebra Compute (SLAC) cores, 18 GB SRAM on-chip memory, 9.6 PB/s memory bandwidth, 100 Pb/s interconnect bandwidth
    * HPE Superdome Flex HPC server
      * 32 x Intel Xeon Platinum 8280L (28 cores each), 24 TiB RAM, 32 x 6.4 TB NVMe SSDs, 24 x 100 GbE interfaces

---
# XSEDE Resources

* San Diego Supercomputer Center (SDSC)
  * [Expanse](https://www.sdsc.edu/services/hpc/expanse/)
    * 728 AMD EPYC 7742 nodes, 128 cores/node, 256 GB RAM.
    * 52 Intel Xeon Gold 6248 nodes, 40 cores/node, 384 GB RAM, 4 NVIDIA V100 SXM2 GPUs/node.
    * 4 AMD EPYC 7742 nodes, 128 cores/node, 2 TB RAM.
    * 12 PB Lustre storage
    * 7 PB Ceph storage
  * [Voyager](https://www.sdsc.edu/News%20Items/PR20200701_voyager.html)
    * Innovative system architecture uniquely optimized for deep learning (DL) operations and AI workloads.
    * A three-year ‘test bed’ phase with a select set of research teams, followed by a two-year phase in which the system will be more widely available through an NSF-approved allocation process.

---
# XSEDE Resources

- Purdue University
  - [Anvil](https://www.rcac.purdue.edu/compute/anvil/)
    - 1000 AMD Milan nodes, 128 cores/node, 256 GB RAM.
    - 32 AMD Milan nodes, 128 cores/node, 1 TB RAM.
    - 16 AMD Milan nodes, 128 cores/node, 256 GB RAM, 4 NVIDIA A100 GPUs/node.
- University of Kentucky
  - [KyRIC](https://docs.ccs.uky.edu/display/HPC/KyRIC+Cluster+User+Guide)
    - 5 Intel Xeon E7-4820 v4 nodes, 40 cores/node, 3 TB RAM, dedicated to XSEDE.
- Texas A&M University
  - [FASTER](https://hprc.tamu.edu/faster/)
    - 180 nodes with dual Intel 32-core Ice Lake processors.
    - About 270 NVIDIA GPUs (40 A100 and 230 T4/A40/A10 GPUs).
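
---
# Aggregate Capacity (Sketch)

The per-node listings on the XSEDE Resources slides can be rolled up into total CPU cores and a nominal yearly core-hour ceiling. This makes it easier to compare systems when choosing resources. The Python below is only a sketch and not an official tool. It uses just the node counts quoted for Bridges-2, Expanse, and Anvil, and it ignores GPUs. Its names (`SYSTEMS`, `total_cores`, `nominal_core_hours_per_year`) are hypothetical. "Nominal" assumes every node runs 24 hours a day, 365 days a year.

```python
# Sketch: roll up the per-node specs quoted on the XSEDE Resources slides.
# Hypothetical helper names; only CPU cores are counted, GPUs are ignored.

HOURS_PER_YEAR = 24 * 365  # nominal: every node up all year (never true in practice)

# (node count, cores per node) for each node type quoted on the slides
SYSTEMS = {
    "Bridges-2": [(488, 128), (4, 48), (24, 40)],
    "Expanse": [(728, 128), (52, 40), (4, 128)],
    "Anvil": [(1000, 128), (32, 128), (16, 128)],
}


def total_cores(node_types):
    """Sum node_count * cores_per_node over all node types of one system."""
    return sum(nodes * cores for nodes, cores in node_types)


def nominal_core_hours_per_year(node_types):
    """Upper bound on core-hours per year, assuming 100% availability."""
    return total_cores(node_types) * HOURS_PER_YEAR


if __name__ == "__main__":
    for name, node_types in SYSTEMS.items():
        print(f"{name}: {total_cores(node_types):,} cores, "
              f"{nominal_core_hours_per_year(node_types):,} nominal core-hours/year")
```

Real allocatable capacity is lower because of maintenance and reserved partitions. Each resource also defines its own service unit (SU). Treat these figures as rough upper bounds, not as allocation amounts.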
---
# XSEDE Resources

- National Center for Supercomputing Applications (NCSA)
  - [Delta](http://www.ncsa.illinois.edu/news/story/nsf_awards_ncsa_10_million_for_deployment_of_delta) (from 2021-11-01)
    - 124 nodes with dual AMD 64-core processors, 256 GB RAM
    - 100 nodes with a single AMD 64-core processor, 256 GB RAM, 4x A100 GPUs
    - 100 nodes with a single AMD 64-core processor, 256 GB RAM, 4x A40 GPUs
    - 5 nodes with dual AMD 64-core processors, 2 TB RAM, 8x A100 GPUs
    - 1 node with dual AMD 64-core processors, 2 TB RAM, 8x AMD MI100 GPUs
- Johns Hopkins University
  - [Rockfish](https://www.marcc.jhu.edu/rockfish-description/)
    - 368 Intel Cascade Lake 6248R nodes, 48 cores/node, 192 GB RAM
    - 10 Intel Cascade Lake 6248R nodes, 48 cores/node, 1.5 TB RAM
- University of Delaware
  - [Darwin](https://dsi.udel.edu/core/computational-resources/darwin/)
    - 105 compute nodes with a total of 6672 cores, 22 GPUs, 100 TB of memory, and 1.2 PB of disk storage.

---
# XSEDE Resources

* Open Science Grid (OSG)
  * A virtual HTCondor pool made up of resources from the Open Science Grid.
  * For high-throughput jobs that use a single core, or a small number of threads that fit on a single compute node.
  * _The NSF CC* award requires allocating 20% of Hawk resources to OSG._
* Open Storage Network (OSN)
  * A cloud storage resource, geographically distributed among several pods.
  * Each pod hosts 1 PB of storage and is connected to R&E networks at 50 Gbps.
  * Pods are currently hosted at SDSC, NCSA, MGHPCC, RENCI, and Johns Hopkins University.
  * Cloud-style storage of project datasets, accessible with AWS S3-compatible tools such as rclone, Cyberduck, or the AWS CLI.
  * For storage allocations larger than 10 TB and up to 300 TB.

---
# How do I get started on XSEDE?

* Apply for an account at the [XSEDE Portal](https://portal.xsede.org).
  * There is no charge for an XSEDE portal account.
  * A portal account is required to register for XSEDE tutorials and workshops.
- To use XSEDE's compute and data resources, you need an allocation.
  - An allocation on a particular resource activates your account on that resource.
  - Faculty and researchers from US universities and federal research labs can serve as Principal Investigators (PIs).
  - A PI can add students to their allocations.
* XSEDE also has a Campus Champion Program.
  * Campus Champions are a local source of knowledge about high-performance and high-throughput computing and other digital services, opportunities, and resources.
  * They can request Startup allocations on all XSEDE resources to help local users get started.

---
# XSEDE Allocations: Startup

* One of the fastest ways to gain access to and start using XSEDE-allocated resources.
* XSEDE recommends that all new users begin by requesting a Startup allocation.
* Appropriate uses for Startup allocations include:
  * Small-scale computational activities
  * Application development by researchers and research teams
  * Benchmarking, evaluation, and experimentation on the various resources
  * Developing a science gateway or other infrastructure software components
* Requests are subject to per-resource Startup limits and may cover no more than three computational resources.
- Requirements:
  - Project abstract: describe the research objectives, briefly explain why the project needs access to XSEDE resources, state how the requested amounts were estimated, and note why the requested resources were selected.
  - CV: two pages long, in NIH or NSF format.
  - Renewals: require a progress report.
- [More information](https://portal.xsede.org/allocations/startup)

---
# XSEDE Allocations: Education

* For academic courses or training activities that have specific begin and end dates.
* Instructors may request a single resource or a combination of resources.
  - Same allocation size limits as Startup requests.
  - Limited to no more than three separate computational resources.
* Allocations last for the duration of the semester or training course;
  * a brief extension (usually two weeks or less) after the semester or course may be requested to allow completion of some activities.
- A Principal Investigator (PI) may request separate Educational projects for different courses happening at the same time.
* Requests require an abstract, the instructor's CV, a course syllabus, and a brief justification for the resources requested.
- [More information](https://portal.xsede.org/allocations/education)

---
# XSEDE Allocations: Research

- *Users are encouraged to request a Startup allocation before requesting a Research allocation,*
  - *in order to obtain benchmark results for preparing the Research allocation request.*
  - *A lack of benchmark results on the requested resources will greatly increase the level of scrutiny from the review panel.*
* For projects that have progressed beyond the Startup phase, either in purpose or in scale of computational activities, a Research request is appropriate.
* Research requests are accepted and reviewed quarterly by the XSEDE Resource Allocations Committee (XRAC).

.center[
| Submission Period | Allocation Begin Date |
|:------------------:|:---------------------:|
| Dec 15 to Jan 15 | April 1 |
| Mar 15 to Apr 15 | July 1 |
| Jun 15 to Jul 15 | October 1 |
| Sep 15 to Oct 15 | January 1 |
]

---
# Research Allocation Request

* Eligibility: faculty members and researchers, including postdoctoral researchers, at a U.S.-based institution.
  * Investigators with support from any funding source, not just NSF, are encouraged to apply.
  * An individual investigator may have only one active Research project at a time.
  * PIs can request that other users be given accounts to use the allocation.
- Required components:
  * [Main Document](https://portal.xsede.org/allocations/research#requireddocs-main):
    * 10 pages for requests < 10M SUs (service units).
    * 15 pages for requests ≥ 10M SUs.
  * [Progress Report](https://portal.xsede.org/allocations/research#requireddocs-progress): 3 pages.
  * [Code Performance & Resource Costs](https://portal.xsede.org/allocations/research#requireddocs-codeperformance): 5 pages.
  * [Curriculum Vitae (CV)](https://portal.xsede.org/allocations/research#requireddocs-cv): 2 pages.
  * [References](https://portal.xsede.org/allocations/research#requireddocs-refs): No limit.
* Optional
  - [Special Requirements](https://portal.xsede.org/allocations/research#requireddocs-reqs): 1 page.

---
# Main Document Guidance

* [Scientific Background and Support](https://portal.xsede.org/allocations/research#maindoc-scientificbackground)
  * Succinctly state the scientific objectives that will be facilitated by the allocation(s).
* [Research Questions](https://portal.xsede.org/allocations/research#maindoc-researchquestions)
  * Identify the specific research questions that are covered by the allocation request.
* [Resource Usage Plan](https://portal.xsede.org/allocations/research#maindoc-resourceusage)
  * The bulk of the Main Document should focus on the resource usage plan and allocation request.
  * **Inadequate justification for requested resources is the primary reason for most reduced or denied allocations.**
* [Justifying Allocation Amounts](https://portal.xsede.org/allocations/research#maindoc-allocations)
  * Combine quantitative parameters from the resource usage plan with basic resource usage cost information to calculate the allocation needed.
  * Tabulate and calculate the costs for each resource and resource type.
* [Resource Appropriateness](https://portal.xsede.org/allocations/research#maindoc-resourceappropriateness)
  * Briefly detail the rationale for selecting the requested resources.
* [Disclosure of Access to Other Compute Resources](https://portal.xsede.org/allocations/research#maindoc-accesstoresources)

---
# National Science Foundation (NSF)

* In addition to XSEDE, NSF also provides [Frontera](https://fronteraweb.tacc.utexas.edu/), a 38 PFLOPS supercomputer at the Texas Advanced Computing Center (TACC).
  * A heterogeneous Dell EMC system powered by Intel processors, interconnected by Mellanox InfiniBand HDR and HDR-100.
    - 8008 compute nodes available.
    - Intel Xeon Platinum 8280 ("Cascade Lake"), 28 cores per socket, 56 cores per node.
    - 360 NVIDIA Quadro RTX 5000 GPUs, 128 GB per node.
    - IBM POWER9-hosted system with 448 NVIDIA V100 GPUs, 256 GB per node (4 nodes with 512 GB per node).
* 80% of Frontera's capacity, or about 55 million node hours, will be made available to scientists and engineers around the country.
  - [Allocation Information](https://frontera-portal.tacc.utexas.edu/allocations/)
  - [Allocation Submission Guidelines](https://frontera-portal.tacc.utexas.edu/allocations/policy/)

---
# Department of Energy (DOE)

* The Innovative and Novel Computational Impact on Theory and Experiment ([INCITE](https://www.doeleadershipcomputing.org/about/)) program is open to researchers from academia, government labs, and industry.
  - Proposals are accepted between mid-April and the end of June, for projects of up to three years.
  - [Argonne Leadership Computing Facility](https://www.alcf.anl.gov/)
    - Theta: 13.5M node hours, 11.69 PFLOPS Cray XC40 system.
  - [Oak Ridge Leadership Computing Facility](https://www.olcf.ornl.gov/)
    - Summit: 16M node hours, 200 PFLOPS IBM POWER9 system.
  - [Getting Started](https://www.doeleadershipcomputing.org/getting-started/)
  - [More Information](https://www.doeleadershipcomputing.org/proposal/call-for-proposals)
* National Energy Research Scientific Computing ([NERSC](https://www.nersc.gov/)) Center at Lawrence Berkeley National Lab.
  - Mostly for DOE-sponsored research.
  - [Allocation Information](https://www.nersc.gov/users/accounts/allocations/)
  - [Get Started](http://www.nersc.gov/users/accounts/allocations/first-allocation/)
  - Allocations will be reduced if they are not used within an allocation cycle.

---
# National Center for Atmospheric Research (NCAR)

* The Computational and Information Systems Laboratory ([CISL](https://www2.cisl.ucar.edu/)) provides large computing resources for university researchers and NCAR scientists in atmospheric and related sciences.
  * Cheyenne, a 5.34 PFLOPS HPC system, provides more than 1.2 billion core-hours for allocation each year.
* __University Allocations__
  * Large requests (> 400K SUs):
    * Requests are accepted every six months, in March and September.
    * 220M SUs are available in spring and fall.
  * Small requests (< 400K SUs):
    * U.S. university researchers who are supported by NSF awards can request a small allocation for each NSF award.
    * Requests are accepted throughout the year and reviewed/awarded within a few business days.

---
# NCAR - CISL (contd)

* __Unsponsored Graduate Students and Postdocs__
  * Small allocations are available; no NSF award or panel review is required.
  * Applicants must
    * work in the atmospheric or related sciences,
    * have work that does not lie within the scope of an associated NSF grant, and
    * not have funding to pay for computer time.
* __Classroom Allocation__
  * Accounts are provided to individual students and the professor for assignments in numerical simulations, modeling, and studies of recently introduced computing architectures.
  * CISL can provide consulting assistance to the professor or teaching assistant.
* __Climate Simulation Laboratory__
  * Funding from NSF awards to address climate-related questions is required.
  * The submission deadline is usually in the spring.
  * The minimum request is 20M SUs.

---
class: inverse, middle

# Research Computing Services

---
# HPC Seminars

* Thursdays, 2:00PM - 4:00PM via Zoom
* Register at <https://go.lehigh.edu/hpcseminars>

| Date | Topic |
|:-----|:------|
| Feb. 3 | Research Computing Resources at Lehigh |
| Feb. 10 | Linux: Basic Commands & Environment |
| Feb. 17 | Using the SLURM Scheduler on Sol |
| Feb. 24 | Python Programming |
| Mar. 3 | R Programming |
| Mar. 10 | Introduction to Open OnDemand |
| Mar. 17 | Data Visualization with Python |
| Mar. 24 | Data Visualization with R |
| Mar. 31 | BYOS: Containers on HPC Resources |
| Apr. 7 | Object-Oriented Programming with Python |
| Apr. 14 | Shiny Apps in R |

---
# Workshops

* We provide full-day workshops on programming topics.
  - [Summer Workshops](https://go.lehigh.edu/hpcworkshops)
    - Modern Fortran Programming (Summer 2015, 2021)
    - C Programming (Summer 2015, 2021)
    - HPC Parallel Programming Workshop (Summer 2017, 2018, 2021)
    - Quantum Chemistry Workshop (Summer 2021)
* We also host full-day XSEDE workshops.
  - XSEDE HPC Monthly Workshop: OpenACC (Dec. 2014).
  - XSEDE HPC Summer BootCamp: OpenMP, OpenACC, MPI and Hybrid Programming (Jun. 2015 - 2019).
  - XSEDE HPC Monthly Workshop: Big Data (Nov. 2015, May 2017).

---
# Proposal Assistance

- LTS strongly recommends that researchers develop long-range plans for a sustainable form of data storage early on, to
  - facilitate compliance with future grant applications, and
  - avoid the need to later reformat or migrate data to another storage option.
* The [Research Data Management Committee](https://libraryguides.lehigh.edu/researchdatamanagement) can help with writing Data Management Plans (DMPs) for your proposal.
  * Email us at data-management-group-list@lehigh.edu, or contact:
    * General information: Subject Librarians
    * Research Computing Resources & Services: Alex Pacheco
    * Data Security: Eric Zematis, Chief Information Security Officer
    * Data storage: Help Desk or your Computing Consultant
- [Sample DMPs](https://confluence.cc.lehigh.edu/x/TYYuAg)
- [Budget templates and LTS Facilities document](https://confluence.cc.lehigh.edu/x/FgL5Bg)

---
# Getting Help

* Having trouble running jobs, or need help getting started?
  * Open a help ticket: <http://lts.lehigh.edu/help>
  * See the [guidelines for faster response](https://confluence.cc.lehigh.edu/x/KJVVBw)
- Investing in Sol
  - Contact Alex Pacheco or Steve Anthony
* More Information
  * [Research Computing](https://go.lehigh.edu/hpc)
  * [Research Computing Seminars](https://go.lehigh.edu/hpcseminars)
  * [Condo Program](https://confluence.cc.lehigh.edu/x/EgL5Bg)
  * [Proposal Assistance](https://confluence.cc.lehigh.edu/x/FgL5Bg)
  * [Data Management Plans](http://libraryguides.lehigh.edu/researchdatamanagement)
* Subscribe
  * [Research Computing Mailing List](https://lists.lehigh.edu/mailman/listinfo/hpc-l)
  * [HPC Training Google Groups](mailto:hpctraining-list+subscribe@lehigh.edu)

---
class: inverse, middle

# Thank You!
# Questions?